Why Reddit and Quora Stop Working for GEO (And What Actually Does)

TLDR

Reddit and Quora worked incredibly well for ChatGPT optimization two months ago. Today? Not so much. This rapid shift reveals the biggest challenge with Generative Engine Optimization: what works this month stops working next month. LLMs pull answers from two sources - training data (locked for 6-12 months) and live web searches (happening in real time). Success requires optimizing for both layers: general AI readability (schema, clear claims, front-loaded content) and domain-specific semantic signals (the specific concepts AI looks for in your niche). The agencies winning right now understand how neural networks connect entities internally and build content around those specific semantic signals.

Key takeaways:

- ChatGPT now pulls less from Reddit and Quora than it did 60 days ago

- LLMs use two sources: training time data and live grounding searches

- Missing the training window means waiting 6-12 months for the next model

- Semantic signal optimization beats platform-specific tactics every time

Everyone Is Asking About Reddit and Quora

Two months ago, Reddit and Quora were incredibly effective for influencing ChatGPT responses.

Now? ChatGPT pulls from those platforms significantly less.

This shift happened in weeks.

Clients and prospects ask us about Reddit strategies all the time. It's worth knowing, but we try to reframe the question for them.

The better question is: how do LLMs actually create responses?

Once you understand the mechanics, you stop chasing platforms and start building sustainable optimization.

How LLMs Actually Respond to Prompts

When someone asks ChatGPT a question, the AI pulls from two distinct sources to create its answer.

Understanding these sources changes how you think about optimization.

Source 1: Training Time

When OpenAI trains GPT-5, 5.1, or now 5.2, it ingests massive amounts of data from across the internet. This becomes the foundational knowledge baked into the model.

But here's the catch.

Once a model is trained, its knowledge is frozen. New data only gets in when the next version (GPT-6, for example) is trained, which happens 6 to 12 months later.

Miss your window during training? You're invisible until the next version launches.

This is why timing matters. If your content isn't indexed during the training window, you wait up to a year for the next shot. No amount of Reddit posting fixes that.

Source 2: Grounding (Live Web Search)

For questions needing current information, AI performs live web searches.

When you ask "what are the best running shoes of 2025," the model needs fresh data. So it runs search queries on Bing. Gets back 50 results. Analyzes metadata. Picks the 5 to 10 most relevant sources to cite.

This happens in real time. Every search.

The process:

1. User submits prompt

2. AI converts prompt into search queries

3. Search API returns 50 results

4. AI analyzes metadata (update time, title, structure)

5. AI selects 5-10 most credible sources

6. AI synthesizes answer
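
To make the selection step concrete, here is a minimal Python sketch of how a grounding layer might rank search results on metadata alone. Everything in it is an assumption for illustration: the `SearchResult` fields, the scoring weights, and the `select_sources` helper are hypothetical, and no AI vendor has published its ranking logic in this form.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class SearchResult:
    url: str
    title: str
    updated: date              # last-modified date from page metadata
    has_structured_data: bool  # e.g. schema markup was detected


def score(result: SearchResult, query_terms: set[str], today: date) -> float:
    """Hypothetical score built only from metadata (weights are made up)."""
    title_terms = set(result.title.lower().split())
    overlap = len(query_terms & title_terms) / max(len(query_terms), 1)
    age_days = (today - result.updated).days
    freshness = 1.0 / (1.0 + age_days / 30)  # newer pages score higher
    structure = 0.2 if result.has_structured_data else 0.0
    return overlap + freshness + structure


def select_sources(results: list[SearchResult], query: str,
                   today: date, k: int = 5) -> list[SearchResult]:
    """Keep the k highest-scoring results, mirroring the 'pick 5-10' step."""
    terms = set(query.lower().split())
    ranked = sorted(results, key=lambda r: score(r, terms, today), reverse=True)
    return ranked[:k]
```

The point of the sketch is the shape of the decision, not the numbers: freshness, title match, and structure are all things you control on your own pages.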

This is where most brands focus. Post content today. Get cited this week.

But most people miss what happens inside that black box between steps 4 and 5.

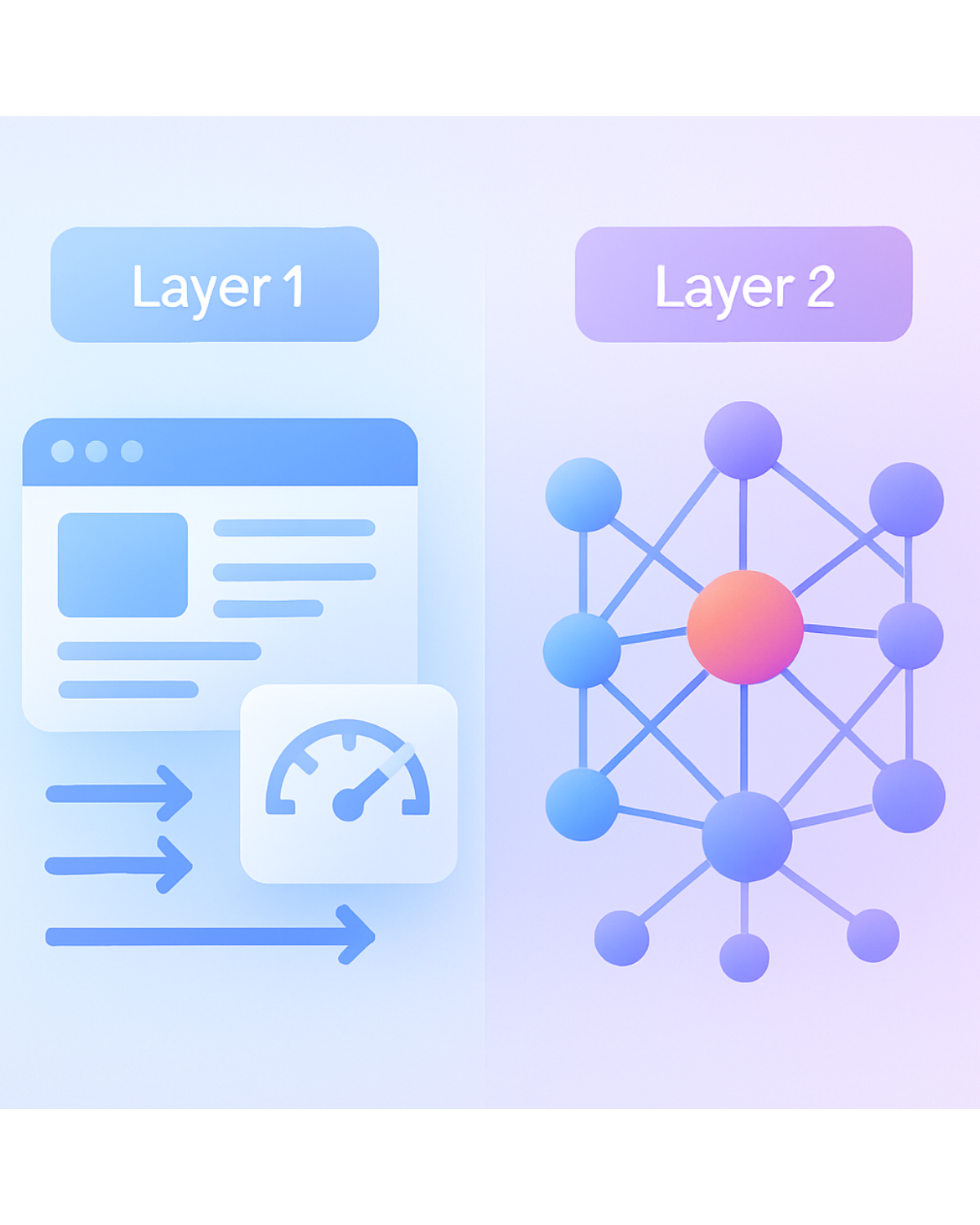

The Two Optimization Layers That Matter

GEO requires two different approaches working together.

Most agencies only focus on one. The winners optimize for both.

Layer 1: General AI Readability

These are table stakes. Every website needs them:

- Schema markup for structured data

- Clear claims backed by statistical evidence

- Logical content flow that's easy to parse

- Front-loaded content density

- Fast technical setup (AI crawlers are impatient)
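
For the schema markup item, a small Python sketch shows one way to generate a JSON-LD block for an article page. The `article_jsonld` helper and the sample values are hypothetical; the property names (`headline`, `author`, `datePublished`, `dateModified`) come from the schema.org Article type.

```python
import json


def article_jsonld(headline: str, author: str,
                   published: str, modified: str) -> str:
    """Return a <script> tag holding schema.org Article markup."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Organization", "name": author},
        "datePublished": published,  # ISO 8601 date string
        "dateModified": modified,    # keep this current when you update
    }
    body = json.dumps(data, indent=2)
    return f'<script type="application/ld+json">\n{body}\n</script>'


# Sample values below are placeholders, not real publication data.
print(article_jsonld("How LLMs pick sources", "Example Agency",
                     "2025-01-10", "2025-03-02"))
```

Paste the output into the page head. An accurate `dateModified` matters here because update time is one of the metadata fields the grounding step looks at.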

Think of these as your ticket to the game. Without them, you don't get considered.

But they don't win you the game.

Layer 2: Domain-Specific Semantic Signals

This is where most platforms fail.

LLMs are neural networks. A useful way to picture how they organize information is as a graph: nodes connected by edges. Each node represents an entity or concept.

One node for Toyota. One node for affordability. One node for reliability. One node for the Camry model.

When AI parses your content, it's forming connections between these nodes. It's looking for specific semantic signals that trigger those connections.
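
Here is a toy Python sketch of that node-and-edge picture. It counts how often known entities appear together in the same sentence and treats each co-occurrence as strengthening an edge. This illustrates the mental model only; the `ENTITIES` set and `build_graph` helper are hypothetical, and real models do not store a literal lookup table like this.

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical entity list for the Toyota example.
ENTITIES = {"toyota", "camry", "affordability", "reliability"}


def build_graph(sentences: list[str]) -> dict[tuple[str, str], int]:
    """Count sentence-level co-occurrences between known entities."""
    graph: dict[tuple[str, str], int] = defaultdict(int)
    for sentence in sentences:
        words = {w.strip(".,!?").lower() for w in sentence.split()}
        present = sorted(ENTITIES & words)
        # Every pair of entities in the same sentence strengthens one edge.
        for a, b in combinations(present, 2):
            graph[(a, b)] += 1
    return graph


graph = build_graph([
    "The Toyota Camry is known for reliability.",
    "Toyota balances affordability and reliability.",
])
for edge, weight in sorted(graph.items()):
    print(edge, weight)
```

In this toy run the Toyota-to-reliability edge gets reinforced twice, while Camry and affordability never connect. Content that states the connection explicitly is what closes that kind of gap.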

If you understand what signals AI looks for in your domain and build them into your content, you become the authoritative source AI cites every time.

This is what we've built into Visto's platform. Our technology understands how LLMs interpret and weight information internally. We analyze which semantic signals move the needle for specific prompts in specific industries.

Why Platform Tactics Keep Failing

Here's the pattern:

Month 1: "Reddit is the answer!"

Month 2: ChatGPT changes weighting. Results drop 40%.

Month 3: "Quora is where it's at!"

Month 4: Same story.

The problem isn't the platforms. The problem is chasing platforms instead of understanding fundamentals.

When you optimize for semantic signals, you're platform-agnostic. Your content gets cited because you've built it around the concepts AI searches for.

To get the best GEO results for your business or clients, you need to stop asking "which platform should we post on?" and start asking "what semantic signals does our target prompt require?"

What This Means for Your GEO Strategy

Most SEO folk think in keywords. Find 5-10 high-volume terms. Optimize. Track rankings.

GEO doesn't work that way.

You're optimizing for hundreds of long-tail prompts. Each prompt triggers different semantic signals. Each signal connects different nodes in the neural network.

Instead of "rank #1 for best CRM software," you need to be cited when someone asks:

- "What CRM works best for small sales teams?"

- "Compare Salesforce vs HubSpot for B2B companies"

- "Best CRM with native email integration under $100/month"

Each prompt looks for different semantic signals. Different entities. Different graph connections.
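
One rough way to work with this is to write down the signals you believe each prompt needs, then check a page against them. The Python sketch below does that with plain substring matching. The signal lists in `PROMPT_SIGNALS` are our own guesses for illustration, not data extracted from any model, and the `coverage` helper is hypothetical.

```python
# Hypothetical signal lists: guesses at what each prompt needs to see.
PROMPT_SIGNALS = {
    "What CRM works best for small sales teams?":
        {"crm", "small sales teams"},
    "Compare Salesforce vs HubSpot for B2B companies":
        {"salesforce", "hubspot", "b2b"},
    "Best CRM with native email integration under $100/month":
        {"crm", "email integration", "under $100/month"},
}


def coverage(content: str, signals: set[str]) -> tuple[float, set[str]]:
    """Return the share of signals found in content, plus the missing ones."""
    text = content.lower()
    found = {s for s in signals if s in text}
    return len(found) / len(signals), signals - found


page = ("Our CRM is built for small sales teams "
        "and includes native email integration.")
for prompt, signals in PROMPT_SIGNALS.items():
    ratio, missing = coverage(page, signals)
    print(f"{ratio:.0%}  {prompt}  missing: {sorted(missing)}")
```

Substring matching is crude, but even this shows the pattern: one page can fully cover one prompt and miss another entirely.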

This is why Reddit tactics fail. You need to understand how the neural network connects your brand to adjacent concepts. Then build content that strengthens those specific connections.

FAQ: LLM Optimization Questions

How often do LLMs retrain their models?

Major updates happen every 6-12 months. GPT-5 won't be retrained until GPT-6 launches. Miss the training window? You wait up to a year for another shot at the foundational knowledge base.

Does posting on Reddit still help with GEO?

It helps less for ChatGPT than it did two months ago. But Reddit remains valuable for some platforms. Don't rely on any single platform. Focus on semantic signals that work across all AI search engines.

What's the difference between SEO and GEO optimization?

SEO optimizes to rank #1 for keywords. GEO optimizes to be one of the 5-10 sources AI cites. Content structure and semantic signals matter more than traditional ranking factors.

Can I optimize for multiple AI platforms at once?

Yes, and you should. Platform-specific tactics fail when weighting changes. Semantic signal optimization works across ChatGPT, Perplexity, Google AI Overviews, and Gemini because you're optimizing for how neural networks fundamentally process information.

What Agencies Should Do Next

Stop chasing platforms. Start building semantic optimization.

Your next 30 days:

- Identify your top 20 target prompts

- Analyze which semantic signals each prompt requires

- Map the entities and concepts AI connects to your brand

- Build content that strengthens those connections

- Track performance across multiple AI platforms
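
For the tracking step, a simple log of manual or scripted checks is enough to start. The Python sketch below computes a citation rate per platform from records of the form (platform, prompt, cited or not). The `citation_rates` helper and the sample records are invented for illustration; they are not real measurements.

```python
from collections import defaultdict


def citation_rates(checks: list[tuple[str, str, bool]]) -> dict[str, float]:
    """Share of checked prompts where the brand was cited, per platform."""
    totals: dict[str, int] = defaultdict(int)
    cited: dict[str, int] = defaultdict(int)
    for platform, _prompt, was_cited in checks:
        totals[platform] += 1
        if was_cited:
            cited[platform] += 1
    return {platform: cited[platform] / totals[platform] for platform in totals}


# Made-up sample log: (platform, prompt, was our brand cited?)
sample_checks = [
    ("ChatGPT", "What CRM works best for small sales teams?", True),
    ("ChatGPT", "Compare Salesforce vs HubSpot for B2B companies", False),
    ("Perplexity", "What CRM works best for small sales teams?", True),
    ("Perplexity", "Compare Salesforce vs HubSpot for B2B companies", True),
]
print(citation_rates(sample_checks))
```

Rerun the same prompts on a fixed schedule so that a weighting change on one platform shows up as a drop in that platform's rate rather than as a surprise.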

The agencies that make this shift now will have 6-12 months of competitive advantage before the market catches up.

Book a call with our team to see how we analyze semantic signals for your specific target prompts.
